MMLA in Debriefs

Multimodal Analysis of Student Cognitive and Emotional Responses during Team Debriefs

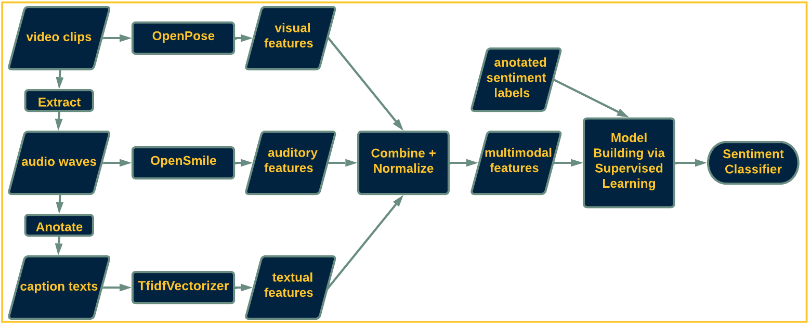

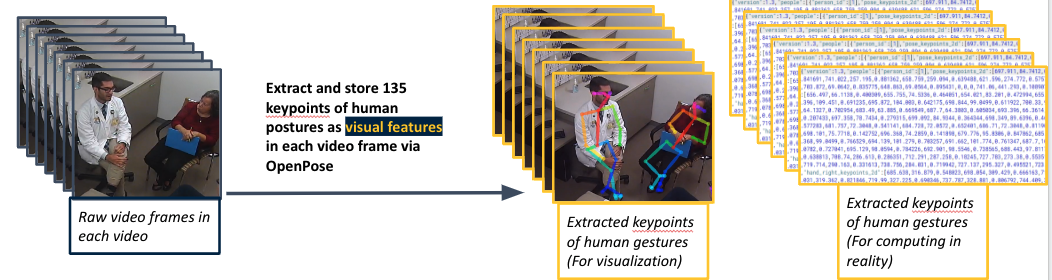

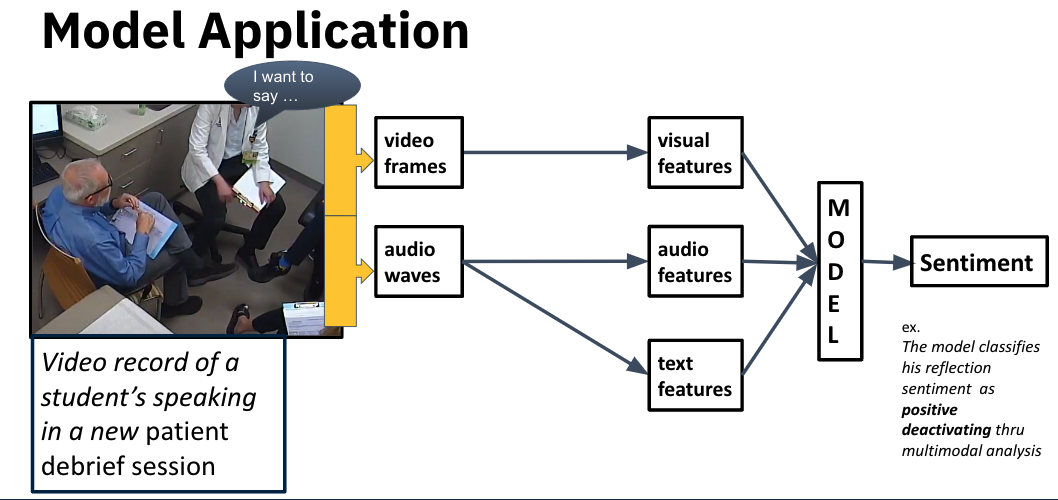

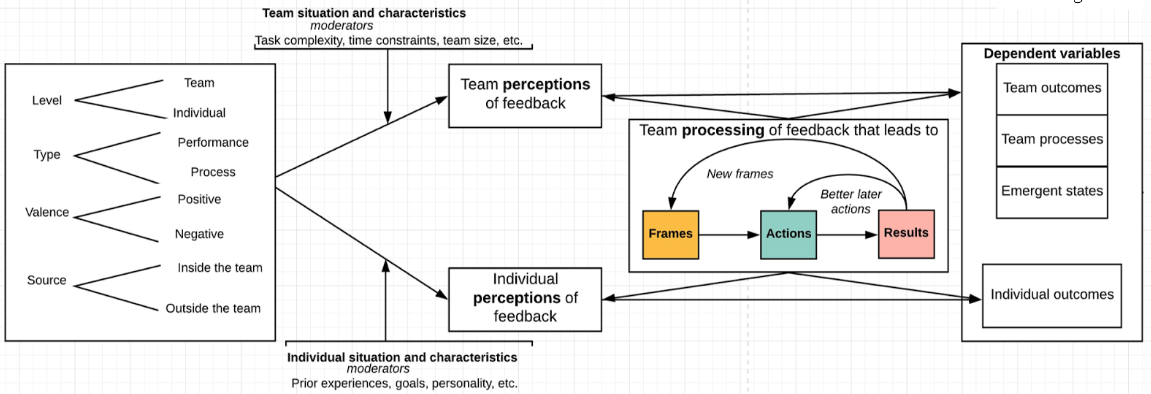

In medicine, “breaking bad news” (BBN) is a skill that future doctors and social workers need to practice and receive meaningful feedback on. Our research team transcribed, analyzed, and annotated over 150 standardized patient simulation videos. We used a novel methodology for multimodal sentiment analysis: audio, visual, and textual features are extracted from the available simulation videos and serve as inputs for multimodal modeling. These data streams were then used to predict and classify a trainee’s emotional states while receiving feedback. The overall goal is to optimize how feedback is delivered and received, in order to prepare future medical professionals for the critical task of delivering bad news.
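To make the idea of combining audio, visual, and textual features more concrete, here is a minimal sketch of feature-level (early) fusion followed by a simple classifier. It is an illustration only, not the project's actual model: the feature names, the toy values, the two labels, and the nearest-centroid classifier are all assumptions chosen to keep the example small.

```python
from math import dist
from statistics import mean


def fuse(audio, visual, text):
    """Early fusion: concatenate per-modality feature vectors into one vector."""
    return list(audio) + list(visual) + list(text)


def fit_centroids(samples, labels):
    """Average the fused vectors of each label to get one centroid per class."""
    by_label = {}
    for vec, label in zip(samples, labels):
        by_label.setdefault(label, []).append(vec)
    return {
        label: [mean(column) for column in zip(*vecs)]
        for label, vecs in by_label.items()
    }


def predict(centroids, vec):
    """Return the label whose centroid is closest (Euclidean) to the vector."""
    return min(centroids, key=lambda label: dist(centroids[label], vec))


# Invented toy data. Hypothetical features per debrief segment:
#   audio  = [pitch variability, speech rate]
#   visual = [smile intensity]
#   text   = [lexical polarity of the transcript]
train = [
    (fuse([0.2, 0.5], [0.8], [0.7]), "positive"),
    (fuse([0.3, 0.6], [0.7], [0.6]), "positive"),
    (fuse([0.8, 0.9], [0.1], [-0.5]), "negative"),
    (fuse([0.7, 0.8], [0.2], [-0.6]), "negative"),
]
centroids = fit_centroids([v for v, _ in train], [y for _, y in train])

new_segment = fuse([0.25, 0.5], [0.75], [0.5])
print(predict(centroids, new_segment))
```

A real pipeline would replace the hand-written numbers with features extracted from the recordings and transcripts, and would likely use a stronger model; the point here is only that each modality contributes part of a single input vector.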

Guiding Research Questions:

(1) How do medical and social work students reflect on, perceive, and process the information cues contained in feedback during BBN debrief sessions?

(2) Can we leverage machine learning to build a sentiment classifier that reliably predicts student engagement in the debrief process in near real time?